SignSight: A Computer Vision ASL Translator

At CruzHacks 2024, our team set out to address the communication gap that the Deaf and Hard-of-Hearing community faces due to a lack of accessible resources. As six engineering students from UC San Diego and USC, we thrive in programming competitions, drawn to the time crunch and collaborative atmosphere that push us to tackle unique challenges and deliver an impactful product. That’s why we created SignSight, formerly known as HoldIt, a mobile phone application that interprets American Sign Language (ASL). It lets the user record someone's ASL gestures and translate them using a computer vision-based action recognition model. Through SignSight, we hope to further empower those who rely on ASL as their primary mode of communication and to provide an educational tool that celebrates the community.

Our LSTM model was trained on a dataset of videos we recorded ourselves during our 36-hour crunch. It uses MediaPipe’s pose keypoints for joints and facial expressions as features. What sets SignSight apart is its recognition of dynamic ASL signs beyond the static alphabet. Dynamic signs are gestures that involve motion, so recognizing them means classifying entire hand movements and facial expressions, which alphabet-only tools do not attempt. We can do this because our model processes a sequence of frames rather than a single image, which enables true action detection. Finally, we use large language models to account for ASL’s grammatical structure, which differs from that of spoken English, so that the translation reads clearly.
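To make the "sequence of frames" idea concrete, here is a minimal sketch of how per-frame keypoints could be turned into fixed-length sequences for an LSTM. It assumes a feature layout modeled on MediaPipe Holistic's outputs (33 pose landmarks with x, y, z, visibility; 468 face landmarks and 21 landmarks per hand with x, y, z) and a 30-frame window; the layout, window length, and the helper names `frame_features` and `SequenceWindow` are illustrative assumptions, not our exact pipeline. Landmarks are passed in as plain nested sequences so the sketch runs without MediaPipe installed.

```python
from collections import deque
from typing import List, Optional, Sequence

# Assumed feature layout, modeled on MediaPipe Holistic's landmark counts.
POSE_LANDMARKS, POSE_DIMS = 33, 4   # x, y, z, visibility
FACE_LANDMARKS, FACE_DIMS = 468, 3  # x, y, z
HAND_LANDMARKS, HAND_DIMS = 21, 3   # x, y, z (per hand)

FEATURES_PER_FRAME = (
    POSE_LANDMARKS * POSE_DIMS
    + FACE_LANDMARKS * FACE_DIMS
    + 2 * HAND_LANDMARKS * HAND_DIMS
)  # 132 + 1404 + 126 = 1662

Landmarks = Optional[Sequence[Sequence[float]]]


def flatten_part(landmarks: Landmarks, count: int, dims: int) -> List[float]:
    """Flatten one body part's landmarks, zero-filling if it was not detected."""
    if landmarks is None:
        return [0.0] * (count * dims)
    if len(landmarks) != count or any(len(p) != dims for p in landmarks):
        raise ValueError("unexpected landmark shape")
    return [float(v) for point in landmarks for v in point]


def frame_features(pose: Landmarks, face: Landmarks,
                   left_hand: Landmarks, right_hand: Landmarks) -> List[float]:
    """Hypothetical helper: concatenate all parts into one fixed-length vector."""
    return (
        flatten_part(pose, POSE_LANDMARKS, POSE_DIMS)
        + flatten_part(face, FACE_LANDMARKS, FACE_DIMS)
        + flatten_part(left_hand, HAND_LANDMARKS, HAND_DIMS)
        + flatten_part(right_hand, HAND_LANDMARKS, HAND_DIMS)
    )


class SequenceWindow:
    """Hypothetical sliding window: keeps the most recent `length` frames."""

    def __init__(self, length: int = 30):
        self.frames = deque(maxlen=length)

    def push(self, features: List[float]) -> Optional[List[List[float]]]:
        """Add a frame; return the full window once enough frames are buffered."""
        self.frames.append(features)
        if len(self.frames) < self.frames.maxlen:
            return None
        return list(self.frames)


# Usage: simulate 30 frames in which only the pose was detected.
window = SequenceWindow(length=30)
fake_pose = [(0.5, 0.5, 0.0, 1.0)] * POSE_LANDMARKS
seq = None
for _ in range(30):
    seq = window.push(frame_features(fake_pose, None, None, None))
# seq is now a 30 x 1662 nested list, the shape a sequence model such as an LSTM consumes.
```

Zero-filling undetected parts keeps every frame the same length, which a recurrent model needs; because the window slides one frame at a time, a live camera feed can be classified continuously rather than one still image at a time.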

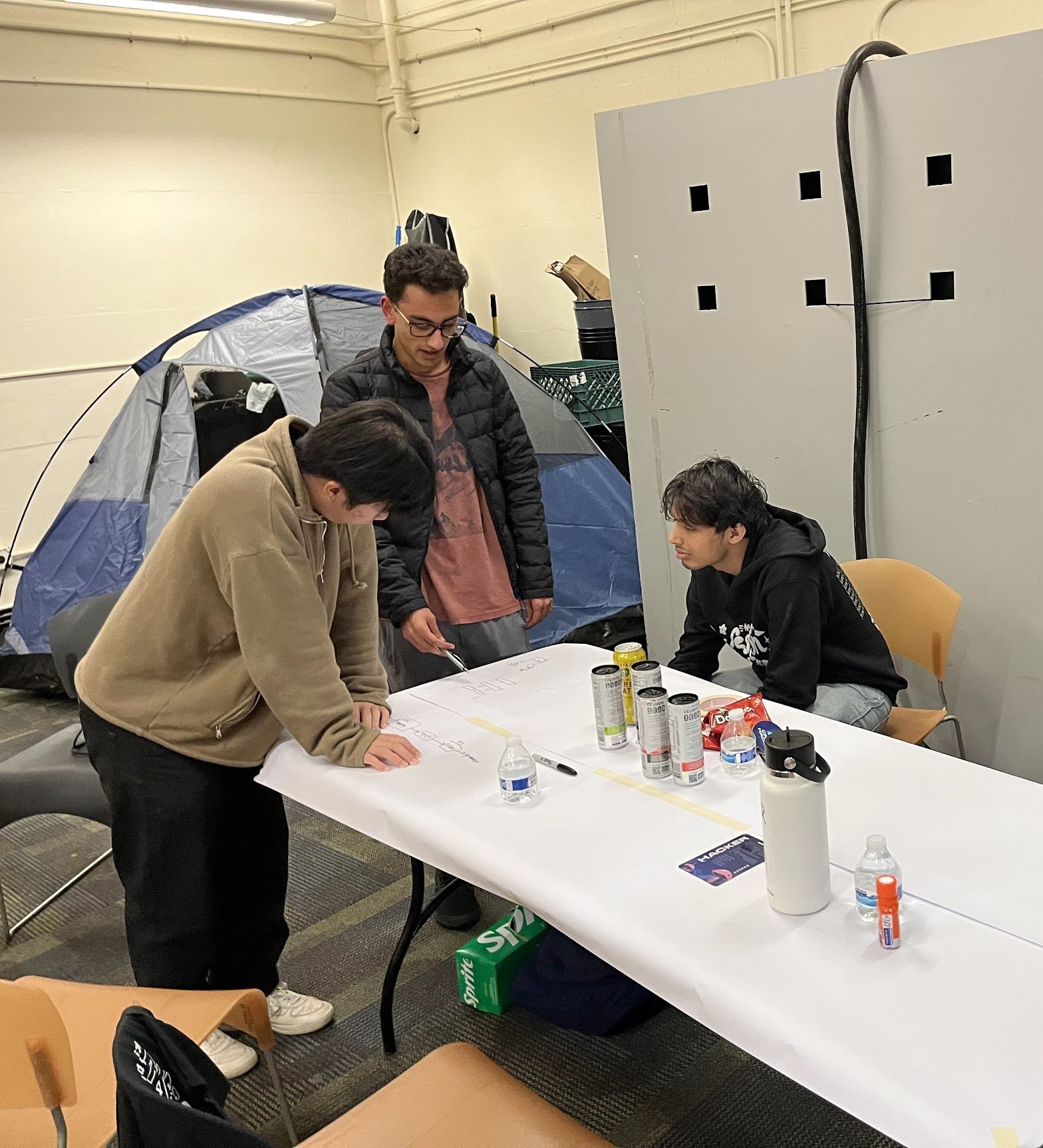

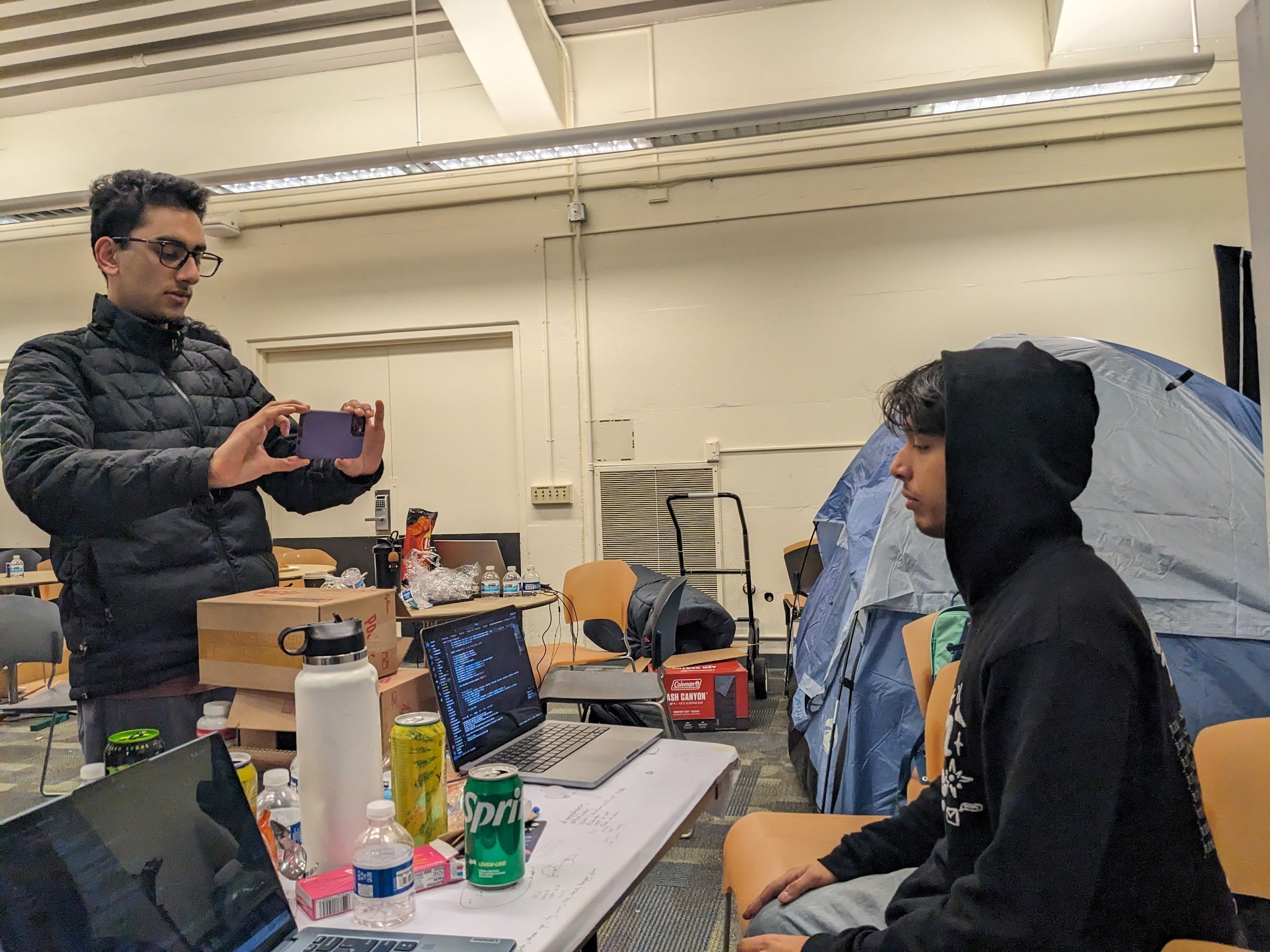

After an exhilarating day-and-a-half at CruzHacks, we earned the 1st place Health Hacks Award and the 1st place Diversity, Equity, and Inclusion Award presented by Fidelity. Sleeping in a tent in the Stevenson Event Center, rerecording our gestures hundreds of times, and digging into the complimentary food made this our most memorable hackathon experience yet. Most importantly, we are grateful to have developed our project alongside a thriving hub of budding scientists, entrepreneurs, and engineers at UCSC.

Our experience at CruzHacks was so rewarding that we are continuing to build SignSight. With an expanded team, we are dedicated to growing SignSight by improving our model, expanding our dictionary of gestures, and working toward a public software release. In particular, we hope to use pre-trained transformers for ASL detection, which we expect to outperform current convolutional and LSTM networks. Just as transformer architectures revolutionized large language models, we hope to see transformer-based sequence modeling enhance ASL translation and computer vision as a whole. Ultimately, we envision a culture where point-of-sale services, lecture halls, and television programs offer machine learning-powered ASL interpretation.

With SignSight, we hope to spread our appreciation for a form of communication so integral to an entire community, allowing anyone to learn from and engage with the Deaf and Hard-of-Hearing community. We are actively seeking insight from engineers, entrepreneurs, and hard-of-hearing individuals. You can learn more about our ongoing project or get in touch at www.sign-sight.com. Our Devpost is at https://devpost.com/software/holdit-1b2d9n.

- Shaurya Raswan, Toby Fenner, Ben Garofalo, Pranay Jha, Niklas Chang, and Kevin Yu